Table of Contents:

- Where is the robots.txt file stored?

- The robots.txt file and directives

- User-agent

- Disallow

- Allow

- Crawl-delay

- Sitemap

- And what about slashe and asterisk?

- Robots.txt file in conclusion

Last updated December 6th, 2023 05:46

The robots.txt file is one of the most important files on a website. This file serves as a guide for search engine crawlers and robots, which crawl through web pages and index them for search results. Without a robots.txt file, crawlers would have to go through the entire website, which can cause unnecessary server load and slow down page loading times. Additionally, this may not always be desirable.

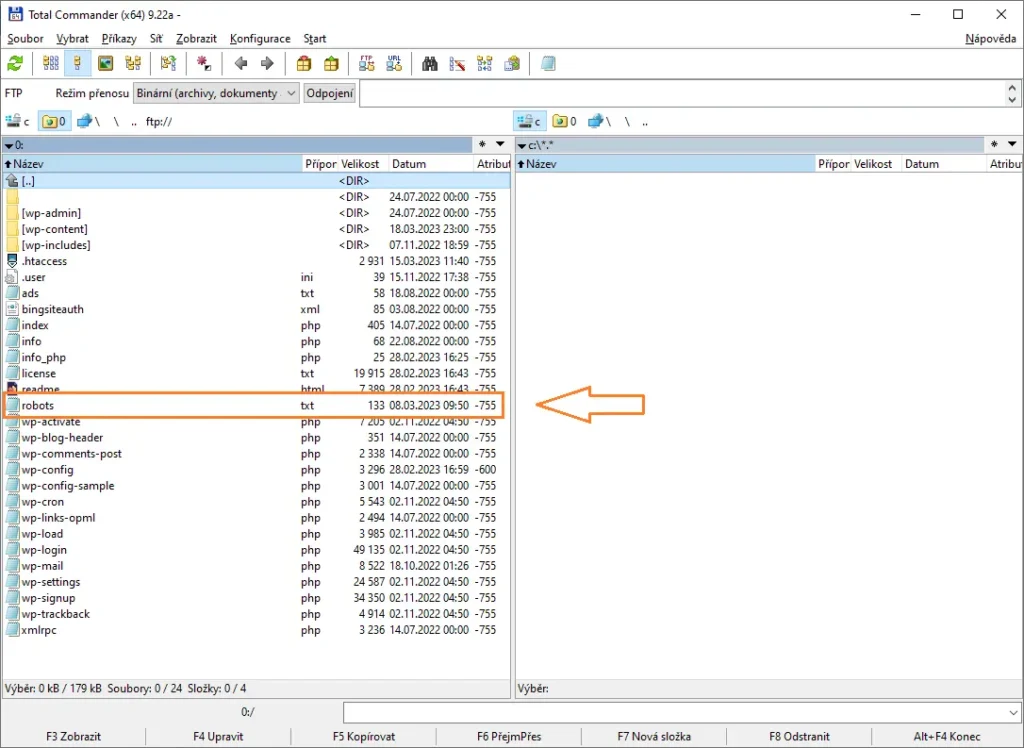

Where is the robots.txt file stored?

The robots.txt file is stored in the root directory of a website, and robots automatically look for it there. For example, the robots.txt file for the website www.my-domain.com would be available at www.my-domain.com/robots.txt.

The robots.txt file and directives

Robots.txt contains several different directives that tell robots how to navigate pages and what to index, or prohibit access to certain folders or areas on the website. Some of the most commonly used directives include:

User-agent

This directive specifies a particular robot or group of robots to which the directive will apply. For example: User-agent: Googlebot. Such a rule will determine the options for Google’s robot.

Disallow

This directive tells robots which pages or directories to ignore and not scan. For example: Disallow: /admin/ will prevent robots from browsing the admin directory. It should be noted that robots may not always adhere to such rules unconditionally. It depends on the behavior of the particular robot whether it will follow such rules or not.

Allow

This directive specifies particular directories or pages that are allowed to be crawled by robots. It is usually used with Disallow. For example: Allow: /blog/ will allow robots to browse the blog directory that was previously prohibited by Disallow. Let me explain further. Suppose we have a website with the following directory structure:

- /blog/

- /products/

- /contact/

If we want to allow robots to browse the “blog” directory but deny them access to the “products” directory, the robots.txt file may look like this:

User-agent: *

Disallow: /produkty/

Allow: /blog/

Crawl-delay

This directive determines the time delay between individual requests of a robot. It is used to limit server load. For example, Crawl-delay: 5 will specify a 5-second delay between robot requests.

User-agent: *

Crawl-delay: 5

Disallow: /admin/

Disallow: /soukromi/

In this example, the directive ‘User-agent: *’ again refers to all robots. The ‘Crawl-delay: 5’ directive specifies a 5-second pause between robot queries to the website. The ‘Disallow’ directives restrict robots’ access to directories ‘/admin/‘ and ‘/privacy/‘.

Sitemap

This directive specifies the location of the sitemap file for the website. The sitemap contains a list of all pages on the website and helps robots with indexing. For example: Sitemap: http://www.my-domain.com/sitemap.xml.

User-agent: *

Disallow: /admin/

Disallow: /soukromi/

Sitemap: https://www.moje-domena.cz/sitemap.xml

And what about slashe and asterisk?

Slash and asterisk are important characters in the robots.txt file. The slash is used to separate directories and pages. For example, if we want to deny robots access to a directory with images, we use the Disallow directive with the directory address. For instance, Disallow: /images/. On the other hand, the asterisk is used as a wildcard for any string. For example, Disallow: /*.pdf will block robots from crawling any PDF file on the site.

Robots.txt file in conclusion

The effective management of websites largely depends on the use of the robots.txt file, which plays a critical role in controlling the indexing and displaying of pages in search engine results. If you aim to take full control over how robots traverse your website, it is essential to learn the correct way to configure and use the robots.txt file. By effectively using the robots.txt file, you can actively monitor and regulate the behavior of robots on your website, which can significantly impact the correct indexing and visibility of your pages in search engine results.

The website is created with care for the included information. I strive to provide high-quality and useful content that helps or inspires others. If you are satisfied with my work and would like to support me, you can do so through simple options.

Byl pro Vás tento článek užitečný?

Klikni na počet hvězd pro hlasování.

Průměrné hodnocení. 0 / 5. Počet hlasování: 0

Zatím nehodnoceno! Buďte první

Je mi líto, že pro Vás nebyl článek užitečný.

Jak mohu vylepšit článek?

Řekněte mi, jak jej mohu zlepšit.

Subscribe to the Newsletter

Stay informed! Join our newsletter subscription and be the first to receive the latest information directly to your email inbox. Follow updates, exclusive events, and inspiring content, all delivered straight to your email.

Are you interested in the WordPress content management system? Then you’ll definitely be interested in its security as well. Below, you’ll find a complete WordPress security guide available for free.